Federated Learning: A Comprehensive Overview

Have you ever wondered how multiple devices can collaborate to learn a shared task without sharing their data directly?

Federated Learning offers a unique solution to this intriguing challenge.

As you explore this comprehensive overview, you will uncover the inner workings of this collaborative learning approach, discover its benefits and applications across various industries, and delve into the algorithms and frameworks that make it all possible.

So, are you ready to unravel the mysteries of Federated Learning and its potential impact on the future of machine learning?

Key Takeaways

- Federated learning keeps raw data on local devices or servers, enabling decentralized collaboration with stronger data privacy and less need to move large datasets.

- Challenges include data privacy concerns, secure model updates, and real-world implementation obstacles.

- Applications span healthcare, finance, retail, telecommunications, and manufacturing for diverse industry benefits.

- Optimization strategies, efficient frameworks, resource management, and performance evaluations drive successful federated learning outcomes.

What Is Federated Learning?

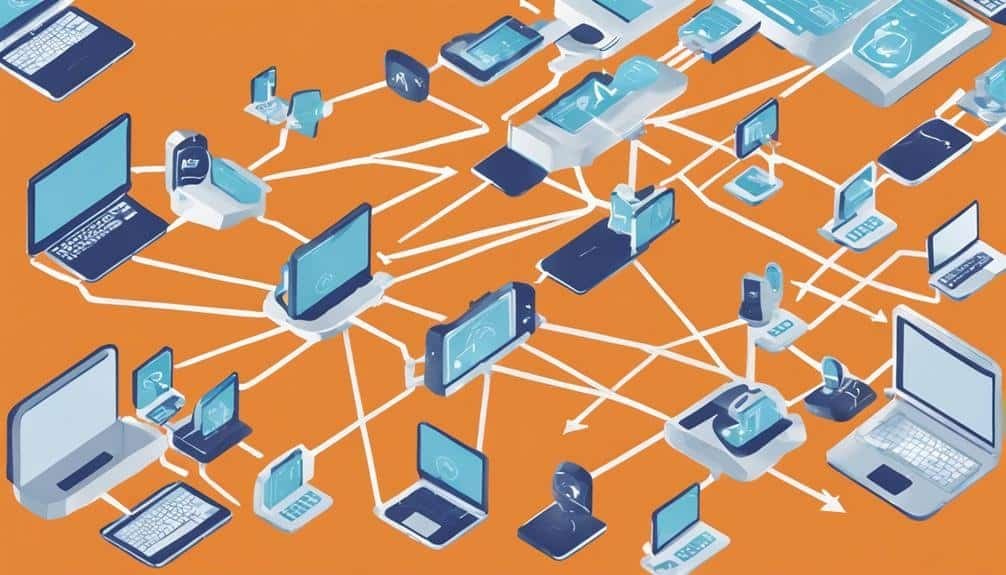

Federated learning is a decentralized machine learning approach that allows multiple parties to collaboratively train a shared model while their data stays locally stored and private. It addresses a limitation of centralized training, which requires pooling data in one location and can therefore create privacy concerns and data security risks. In federated learning, each party retains control over its data, so organizations can work together on model training without sharing sensitive records. This decentralized approach is particularly valuable when data can't easily be centralized because of legal restrictions, data sensitivity, or sheer volume.

Communication infrastructure plays a vital role in federated learning. In the most common setup, a central server coordinates training: it sends the current model to participating devices or servers, which train on their local data and return only model updates over secure channels. The server aggregates these updates into an improved shared model, so the individual datasets never leave their owners. By distributing the learning process this way, organizations can collaborate on the shared model without exposing raw data to any other party. The framework enhances privacy protection and also enables cooperation in environments where data sharing is limited or prohibited.

Benefits of Federated Learning

Using federated learning in machine learning workflows offers several advantages for data privacy and for collaboration among multiple entities. One key benefit is model quality. Because models are trained locally on data distributed across devices or servers, federated learning can draw on far more diverse data than any single participant holds, without centralizing it. A model trained this way is often more robust and accurate than one trained on a single party's data alone, although it usually at best matches what centralized training on the same pooled data would achieve.

Another advantage is reduced data movement. Traditional centralized approaches transmit large amounts of raw data to a central server for processing, which demands substantial bandwidth and storage. Federated learning instead trains models locally on each device or server and transmits only model updates. This can significantly reduce network load when datasets are large relative to the model, but updates are exchanged every training round, so the savings depend on model size, the number of rounds, and how many clients participate. Federated learning also shifts computation onto the participating devices rather than eliminating it.
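A rough back-of-the-envelope comparison makes the trade-off concrete. The sketch below counts the bytes moved if every client uploads its raw dataset once, versus if sampled clients download and upload a float32 model every round. All workload numbers are hypothetical, and compression, retries, and protocol overhead are ignored.

```python
def centralized_bytes(num_clients: int, bytes_per_client: int) -> int:
    """Bytes uploaded if every client ships its raw dataset to the server once."""
    return num_clients * bytes_per_client


def federated_bytes(clients_per_round: int, num_params: int, rounds: int,
                    bytes_per_param: int = 4) -> int:
    """Bytes moved if each sampled client downloads and uploads the full model every round."""
    model_bytes = num_params * bytes_per_param  # 4 bytes per float32 parameter by default
    return rounds * clients_per_round * 2 * model_bytes  # x2: one download plus one upload


# Hypothetical workload: 1,000 clients with 2 GB of raw data each, a 1-million-parameter
# float32 model, 100 clients sampled per round, and 200 training rounds.
central = centralized_bytes(num_clients=1_000, bytes_per_client=2 * 10**9)
federated = federated_bytes(clients_per_round=100, num_params=1_000_000, rounds=200)
print(f"centralized: {central / 1e9:.0f} GB, federated: {federated / 1e9:.0f} GB")
# prints: centralized: 2000 GB, federated: 160 GB
```

With these numbers the federated setup moves far less data. A 100-million-parameter model on the same schedule, however, would move more than the raw datasets, so the comparison is worth redoing for each deployment.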

Federated learning also lets a single global model absorb knowledge from all participants. By repeatedly aggregating local model updates from multiple devices or servers, it converges toward a global model that reflects every participating entity's data. Convergence is not automatically faster than centralized training, however: when local datasets differ substantially (non-IID data), federated training often needs more communication rounds to reach comparable accuracy, which is why the aggregation algorithms covered in the Federated Learning Algorithms section matter.

Challenges in Federated Learning

You need to address the challenges posed by data privacy concerns and the complexities of communication among devices in federated learning.

These challenges can hinder the seamless aggregation and sharing of model updates across multiple devices securely and efficiently.

Finding innovative solutions to overcome these obstacles is crucial for the successful implementation of federated learning in real-world applications.

Data Privacy Concerns

Privacy preservation remains a critical challenge in the landscape of federated learning, requiring innovative solutions to ensure secure data transmission and storage.

Data ownership and user consent are central to addressing these concerns. In federated learning, each user retains ownership of their data, which stays on their device, and user consent determines which data may be used for model training. Keeping data local is not sufficient on its own, however: the model updates a device sends can still leak information about its training data, for example through membership inference or gradient inversion attacks, so the updates need protection as well.

Robust encryption, secure aggregation protocols, and differential privacy address this remaining exposure. By prioritizing them, federated learning can continue to advance while maintaining strong data protection.

Communication Among Devices

Effective communication among devices in federated learning poses a significant challenge due to the decentralized nature of the model training process. Here are some key challenges in this area:

- Device Synchronization: Ensuring that all devices are aligned in terms of model updates and parameters is crucial for accurate training.

- Data Transmission: The efficient and secure transfer of model updates and aggregated information between devices is essential for the federated learning process.

- Cross Device Communication: Facilitating seamless communication between different devices while maintaining data privacy and security can be complex.

- Network Connectivity: Reliable network connections are required for timely and accurate data exchange among devices, influencing the overall performance of federated learning.

How Federated Learning Works

To understand how Federated Learning works, consider its key components:

- Data Privacy Protection

- Collaborative Model Training

- Decentralized Learning Approach

By safeguarding data privacy, Federated Learning allows multiple parties to collaborate on model training without sharing sensitive information.

This decentralized approach empowers devices to learn locally and enhances the collective intelligence of the network without compromising individual privacy.

Data Privacy Protection

In the realm of Federated Learning, safeguarding data privacy is a paramount concern that necessitates innovative solutions to ensure secure collaboration among multiple parties.

- Data encryption: Implementing robust encryption protocols helps protect sensitive data during transmission and storage.

- User consent: Obtaining explicit consent from users ensures that their data is used only for the intended purposes, fostering trust in the federated learning process.

- Anonymization techniques: Employing effective anonymization methods helps prevent the identification of individual data contributors, maintaining anonymity and confidentiality.

- Legal compliance: Adhering to relevant data protection regulations and privacy laws is crucial to avoid potential legal ramifications and uphold the rights of data subjects.

Collaborative Model Training

Collaboratively training models in Federated Learning involves multiple parties sharing insights from their local datasets to collectively improve the global model's performance. This collaborative optimization method allows each party to update the model using its local data, preserving privacy.

After local training, each party sends its updated model parameters to a central server for model aggregation. The server combines these parameters into an improved global model, typically by averaging them and weighting each party by the number of examples it trained on. Repeating this cycle of local training and aggregation produces a global model that learns from every local dataset without the raw data ever leaving its owner.
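To make the loop concrete, here is a minimal Federated Averaging sketch in plain Python. It assumes a toy 1-D linear model y = w*x + b, three hypothetical clients with synthetic, noise-free data, and full client participation every round. The helper names (`local_train`, `fed_avg`, `run_rounds`) are illustrative and not taken from any framework.

```python
import random


def local_train(weights, data, lr=0.05, epochs=5):
    """Run a few epochs of SGD on a 1-D linear model y = w*x + b using only this client's data."""
    w, b = weights
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y  # prediction error on one local example
            w -= lr * err * x
            b -= lr * err
    return [w, b]


def fed_avg(client_weights, client_sizes):
    """Average parameter vectors, weighting each client by its number of training examples."""
    total = sum(client_sizes)
    return [sum(n * cw[i] for cw, n in zip(client_weights, client_sizes)) / total
            for i in range(len(client_weights[0]))]


def run_rounds(clients, rounds=50):
    """Alternate local training and server-side averaging; only parameters leave a client."""
    global_weights = [0.0, 0.0]
    for _ in range(rounds):
        # Each client starts from the current global model and trains on its own data.
        local_models = [local_train(list(global_weights), data) for data in clients]
        global_weights = fed_avg(local_models, [len(data) for data in clients])
    return global_weights


random.seed(0)
# Hypothetical clients: noise-free samples of y = 2x + 1 over different x ranges and sizes.
clients = [[(x, 2 * x + 1) for x in (random.uniform(lo, lo + 1) for _ in range(n))]
           for lo, n in [(0.0, 20), (1.0, 50), (-1.0, 30)]]
print(run_rounds(clients))
```

Because all three clients here share the same underlying relationship, the global model should approach w = 2, b = 1. With heterogeneous (non-IID) client data, plain averaging typically converges more slowly.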

Collaborative model training in Federated Learning ensures that the global model's performance benefits from the diverse insights of all participating parties, making it a powerful and privacy-preserving approach.

Decentralized Learning Approach

Employing a distributed approach, Federated Learning operates by allowing multiple devices to collaboratively train a shared model without the need to centralize data.

- Decentralized Networks: In this setup, data remains on local devices, ensuring privacy and security.

- Privacy Preserving Algorithms: Techniques like differential privacy add calibrated noise to local updates or to their aggregate, limiting how much the result reveals about any individual user's data.

- Secure Communication: Encrypted channels are employed to transmit model updates between devices and the central server.

- Local Model Training: Each device trains a local model using its data, and only the model updates are shared, ensuring data privacy is maintained.

Applications in Various Industries

Applications of federated learning span across diverse industries, showcasing its versatility and potential for revolutionizing data collaboration. This decentralized learning approach allows organizations to harness insights from distributed datasets without the need to centralize data. Here are some industry-specific applications and use cases:

| Industry | Application |
|---|---|
| Healthcare | Predictive maintenance for medical equipment based on usage patterns. |
| Finance | Fraud detection across multiple financial institutions while keeping sensitive data secure. |
| Retail | Personalized recommendation systems for customers without sharing individual shopping histories. |
| Telecommunications | Network optimization by analyzing data from various service providers collectively. |
| Manufacturing | Quality control improvements by aggregating data from different production facilities. |

In healthcare, federated learning enables predictive maintenance models for medical equipment that learn from usage patterns at different hospitals without compromising patient privacy. Financial institutions jointly train fraud detection models on decentralized data, improving detection without sharing sensitive information. Retail companies build personalized recommendations from purchase behavior across stores through collaborative training rather than pooled shopping histories. Telecommunications companies optimize network performance by combining models trained on each service provider's data instead of combining the data itself. In manufacturing, aggregating model updates from diverse production facilities helps identify common quality issues and implement solutions efficiently.

Privacy Concerns in Federated Learning

Data privacy risks are a paramount concern in federated learning.

Implementing robust security measures is essential to safeguard sensitive information and maintain user trust.

Ensuring compliance with data protection regulations is crucial for the success and sustainability of federated learning models.

Data Privacy Risks

Data privacy risks in federated learning arise from the distributed nature of the model training process, introducing potential vulnerabilities in preserving the confidentiality of sensitive data.

- Data Privacy Regulations: Compliance with regulations such as GDPR is crucial to ensure the lawful processing of personal data.

- Differential Privacy Techniques: Adding calibrated noise to gradients or model updates limits how much an attacker can infer about any individual data point.

- Homomorphic Encryption: Utilizing this encryption method allows for computations on encrypted data without exposing the raw information.

- Secure Aggregation Protocols: Secure aggregation lets the server combine model updates from many devices while learning only their sum, never an individual device's update.
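The noise-adding technique can be sketched in the style of DP-FedAvg: clip each client update to a maximum L2 norm so no single client dominates, sum the clipped updates, add Gaussian noise proportional to the clipping bound, and average. The parameters `max_norm` and `noise_multiplier` are assumed values for illustration. Choosing them to meet a specific (ε, δ) guarantee requires a privacy accountant, which is not shown.

```python
import math
import random


def clip(update, max_norm):
    """Scale an update down so its L2 norm is at most max_norm (unchanged if already smaller)."""
    norm = math.sqrt(sum(u * u for u in update))
    scale = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [u * scale for u in update]


def dp_average(updates, max_norm=1.0, noise_multiplier=1.0, rng=random):
    """Clip every client update, sum them, add Gaussian noise scaled to max_norm, then average."""
    clipped = [clip(u, max_norm) for u in updates]
    dim = len(updates[0])
    summed = [sum(c[i] for c in clipped) for i in range(dim)]
    sigma = noise_multiplier * max_norm  # noise std is tied to the per-client sensitivity bound
    noisy = [s + rng.gauss(0.0, sigma) for s in summed]
    return [v / len(updates) for v in noisy]


rng = random.Random(42)  # seeded only so the example is reproducible
client_updates = [[0.2, -0.1, 0.05], [3.0, 4.0, 0.0], [0.1, 0.1, -0.2]]  # hypothetical updates
print(dp_average(client_updates, max_norm=1.0, noise_multiplier=1.1, rng=rng))
```

Clipping bounds each client's influence on the sum, which is what lets a fixed noise level translate into a privacy guarantee.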

Security Measures Needed

The implementation of robust security measures is imperative to address the privacy concerns inherent in federated learning, safeguarding sensitive data during the collaborative model training process.

Threat detection plays a crucial role in identifying and mitigating potential risks to the privacy of data shared across devices. By implementing advanced threat detection mechanisms, such as anomaly detection algorithms and intrusion detection systems, organizations can proactively monitor and respond to any unauthorized access attempts or malicious activities within the federated learning environment.
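As one simple example of anomaly detection, a server can screen out client updates whose L2 norm is far from the typical norm, a common symptom of faulty or malicious clients. The sketch below uses the modified z-score based on the median absolute deviation (MAD) with the conventional 3.5 cutoff. It is a heuristic filter under these assumptions, not a complete defense against poisoning attacks.

```python
import math
import statistics


def l2_norm(update):
    return math.sqrt(sum(u * u for u in update))


def flag_outliers(updates, threshold=3.5):
    """Return indices of updates whose L2 norm is an outlier by the modified z-score (MAD-based)."""
    norms = [l2_norm(u) for u in updates]
    med = statistics.median(norms)
    mad = statistics.median([abs(n - med) for n in norms])
    if mad == 0:
        # Most norms are identical; treat any deviation from the median as suspicious.
        return [i for i, n in enumerate(norms) if n != med]
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score under normality.
    return [i for i, n in enumerate(norms) if 0.6745 * abs(n - med) / mad > threshold]


# Hypothetical client updates; the last one has a much larger norm than the rest.
incoming = [[0.1, -0.2], [0.15, 0.1], [-0.1, 0.2], [0.2, 0.05], [4.0, -3.0]]
print(flag_outliers(incoming))
```

Flagged updates could then be dropped or down-weighted before aggregation.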

Additionally, ensuring robust network security is essential to prevent unauthorized access to data transmitted between devices participating in the federated learning process. Employing encryption protocols and secure communication channels can help fortify the network infrastructure and protect the privacy of sensitive data during model training.

Security Measures in Federated Learning

Implementing robust encryption protocols is crucial to safeguarding sensitive data in Federated Learning environments. When it comes to securing Federated Learning systems, several key security measures must be considered:

- End-to-End Encryption: Encrypting model updates between each device and the server protects them from unauthorized access during transmission and at rest. On its own, it does not hide individual updates from the server that decrypts them, which is why it is usually combined with the measures below.

- Secure Aggregation Protocols: Implementing secure aggregation protocols is essential to protect the aggregated model updates sent by the participating devices. These protocols ensure that the model aggregation process is performed securely without leaking individual device-specific information.

- Privacy-Preserving Techniques: Employing privacy-preserving techniques such as differential privacy can help enhance the security of Federated Learning systems by adding noise to the model updates. This technique helps protect the privacy of individual data contributors.

- Regular Security Audits: Conducting regular security audits and assessments can help in identifying vulnerabilities and ensuring that the Federated Learning system is compliant with the latest security standards. These audits can also aid in threat detection and maintaining robust access control mechanisms.
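The core idea behind many secure aggregation protocols is pairwise masking. Every pair of clients agrees on a random mask; one adds it and the other subtracts it, so each masked update looks random while the masks cancel in the server's sum. The sketch below simulates this with integer-encoded updates modulo 2^32. Generating all masks from one seeded random number generator stands in for the key agreement a real protocol uses, and recovery from client dropouts (usually done with secret sharing) is omitted.

```python
import random

MODULUS = 2**32  # all arithmetic happens modulo 2^32


def pairwise_masks(client_ids, dim, seed=0):
    """Simulate one shared random mask per client pair.

    A real protocol derives each pair's mask from a key agreement between the two clients;
    a single seeded generator stands in for that here.
    """
    rng = random.Random(seed)
    return {(i, j): [rng.randrange(MODULUS) for _ in range(dim)]
            for i in client_ids for j in client_ids if i < j}


def mask_update(cid, update, masks):
    """Add masks shared with higher-id peers and subtract masks shared with lower-id peers."""
    masked = list(update)
    for (i, j), m in masks.items():
        if cid == i:
            masked = [(x + r) % MODULUS for x, r in zip(masked, m)]
        elif cid == j:
            masked = [(x - r) % MODULUS for x, r in zip(masked, m)]
    return masked


def aggregate(masked_updates):
    """Server-side sum: every pairwise mask is added once and subtracted once, so they cancel."""
    dim = len(masked_updates[0])
    return [sum(u[k] for u in masked_updates) % MODULUS for k in range(dim)]


updates = {1: [5, 0, 7], 2: [1, 2, 3], 3: [10, 20, 30]}  # hypothetical integer-encoded updates
masks = pairwise_masks(list(updates), dim=3)
masked = [mask_update(cid, u, masks) for cid, u in updates.items()]
print(aggregate(masked))  # [16, 22, 40]
```

Real-valued updates would first be scaled to fixed-point integers, with negative values wrapping around the modulus.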

Scalability of Federated Learning

To ensure the efficient expansion and performance optimization of Federated Learning systems, scalability considerations play a pivotal role in accommodating diverse network sizes and complexities. Scalability challenges in Federated Learning arise due to the need to coordinate a large number of devices, each with its unique data distribution and processing capabilities. As the network grows, the system must handle increased communication overhead, potential stragglers, and varying computational resources across devices.

Performance optimization is crucial in addressing scalability challenges. One approach is to implement efficient aggregation strategies to reduce communication costs. Techniques like hierarchical aggregation, where groups of devices collaborate before sending updates to the central server, can alleviate the burden on the network. Moreover, employing adaptive learning rates based on device capabilities can enhance convergence speed and overall performance.
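Hierarchical aggregation does not change the result of weighted averaging, provided each intermediate aggregator forwards its total example count along with its averaged update. The sketch below assumes a hypothetical two-level setup in which edge aggregators sit in front of the central server.

```python
def weighted_mean(vectors, weights):
    """Element-wise average of equal-length vectors, weighted by the given example counts."""
    total = sum(weights)
    return [sum(w * v[i] for v, w in zip(vectors, weights)) / total
            for i in range(len(vectors[0]))]


def hierarchical_aggregate(groups):
    """Two-level aggregation; each group lists (update, num_examples) pairs behind one edge aggregator."""
    edge_results = []
    for group in groups:
        vectors = [update for update, _ in group]
        counts = [n for _, n in group]
        # The edge aggregator forwards one averaged vector plus its total example count.
        edge_results.append((weighted_mean(vectors, counts), sum(counts)))
    # The central server averages the edge results, weighted by their total counts.
    return weighted_mean([v for v, _ in edge_results], [n for _, n in edge_results])


groups = [
    [([1.0, 2.0], 10), ([3.0, 4.0], 30)],  # clients behind edge aggregator A
    [([5.0, 6.0], 60)],                     # client behind edge aggregator B
]
flat = weighted_mean([[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]], [10, 30, 60])
print(hierarchical_aggregate(groups), flat)  # [4.0, 5.0] [4.0, 5.0]
```

The central server then handles one message per edge aggregator rather than one per client.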

Another key aspect of scalability is the ability to handle dynamic network conditions. Federated Learning systems must adapt to changes in device availability, network bandwidth, and data distribution. Strategies such as dynamic resource allocation and model compression can help mitigate the impact of fluctuations in the network environment.

Efficiency of Federated Learning

Efficiency in Federated Learning hinges on the optimization of communication protocols and data aggregation methods to enhance overall system performance. To improve communication and resource efficiency in Federated Learning, consider the following key aspects:

- Reduced Communication Overhead: Minimizing the amount of data exchanged between the central server and the participating devices is crucial for efficient federated learning. By transmitting only model updates rather than raw data, communication costs can be significantly reduced.

- Localized Computations: Implementing algorithms that allow for more computations to be performed locally on each device can enhance efficiency. This approach reduces the need for extensive communication during the training process.

- Dynamic Resource Allocation: Efficient federated learning systems dynamically allocate computational resources based on the complexity of the local models and the available device resources. This adaptive approach ensures optimal resource utilization.

- Optimized Aggregation Methods: Weighted averaging combines model updates in proportion to each device's data, while quantizing updates before transmission shrinks every message. Together, these techniques combine updates from many devices effectively while reducing communication overhead.
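Quantization can be sketched as uniform 8-bit encoding. Each float in an update is mapped to an integer code between 0 and 255 using the vector's own minimum and range, so a float32 update shrinks to roughly a quarter of its size plus two floats of metadata. The per-vector min/max scheme and the helper names are assumptions for illustration; production systems often use stochastic rounding or per-block scales instead.

```python
def quantize(update, bits=8):
    """Map each float to an integer code in [0, 2**bits - 1] using the vector's min and range."""
    lo, hi = min(update), max(update)
    levels = 2**bits - 1
    scale = (hi - lo) / levels if hi > lo else 1.0  # avoid dividing by zero for constant vectors
    codes = [round((u - lo) / scale) for u in update]
    return codes, lo, scale


def dequantize(codes, lo, scale):
    """Approximately reconstruct the floats; each value is off by at most about scale / 2."""
    return [lo + c * scale for c in codes]


update = [-0.5, 0.0, 0.25, 1.0]  # hypothetical model update
codes, lo, scale = quantize(update)
packed = bytes(codes)  # one byte per parameter instead of four for float32
restored = dequantize(list(packed), lo, scale)
print(len(packed), restored)
```

The rounding error is bounded by half a quantization step, which is the price paid for a fourfold reduction in message size.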

Federated Learning Vs. Traditional Machine Learning

When comparing Federated Learning to Traditional Machine Learning, you'll notice a shift towards decentralized model training. This method allows models to be trained across multiple devices or servers without the need to centralize data.

Furthermore, by focusing on privacy-preserving data collaboration, Federated Learning addresses the challenges associated with sharing sensitive information in traditional machine learning approaches.

Decentralized Model Training

Decentralized model training in federated learning presents a paradigm shift from traditional machine learning approaches, offering enhanced privacy and scalability.

- Decentralized Optimization: Each edge device performs local model updates independently, optimizing its own parameters.

- Model Synchronization: Aggregated updates from multiple devices are synchronized to create a global model without centralizing data.

- Efficient Scalability: The decentralized nature allows for training on a vast number of devices simultaneously, reducing training time.

- Enhanced Privacy: Data remains on local devices and only model updates are shared, which keeps sensitive records out of any central store.

Privacy-Preserving Data Collaboration

Transitioning from decentralized model training, the comparison between Privacy-Preserving Data Collaboration in Federated Learning and Traditional Machine Learning reveals significant distinctions in data handling and security measures. In Federated Learning, data encryption and secure transmission protocols are employed to safeguard sensitive information during model training across multiple devices. Trust establishment mechanisms are crucial in ensuring the integrity of the collaborative process, with user consent playing a pivotal role in determining data access permissions.

This approach contrasts with Traditional Machine Learning, where data is typically centralized, posing higher risks of privacy breaches. By prioritizing privacy-preserving techniques such as encryption and secure communication channels, Federated Learning mitigates privacy concerns and enhances the overall security posture of collaborative model training efforts.

Future Trends in Federated Learning

As the field of federated learning continues to evolve rapidly, emerging trends suggest a shift towards enhanced privacy-preserving techniques and scalability solutions.

- Enhanced Privacy-Preserving Techniques: Federated learning is likely to see further advances in privacy-preserving techniques such as secure aggregation methods and differential privacy mechanisms. These enhancements aim to protect sensitive data during model training across decentralized devices.

- Scalability Solutions: Addressing the scalability challenge is crucial for widespread adoption. Current trends point to more efficient communication protocols and model optimization strategies for handling large-scale federated learning systems.

- Interoperability Standards: Common interoperability standards would promote federated learning adoption. Future developments may include standardized communication interfaces and protocols that let diverse devices and platforms collaborate seamlessly.

- Edge Computing Integration: The use of edge computing in federated learning frameworks is expected to grow. This integration can reduce latency, speed up model inference, and strengthen data security on edge devices, making federated learning systems more efficient and reliable.

Federated Learning Algorithms

Utilizing advanced optimization techniques can significantly enhance the performance and efficiency of federated learning algorithms. When it comes to federated learning algorithms, the key focus lies in optimizing the models that are trained across decentralized devices or servers while preserving data privacy and security. Various optimization methods are employed to ensure that these algorithms perform efficiently in a distributed environment.

One essential aspect of federated learning algorithms is model optimization: updating the global model from the local updates of participating devices or servers so that it converges while data stays private. Federated Averaging, which averages client models weighted by each client's number of training examples, is the standard baseline. Federated Learning with Momentum, which applies momentum to the aggregated update on the server, is also commonly used to speed up and stabilize optimization.
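A server-side momentum variant, often called FedAvgM, can be sketched as follows. The weighted average of client deltas (client model minus global model) is treated as a pseudo-gradient, accumulated into a momentum buffer, and then applied to the global model. The parameter names `server_lr` and `beta` are illustrative. With `beta=0` and `server_lr=1.0`, the step reduces to plain weighted Federated Averaging.

```python
def server_momentum_step(global_weights, client_weights, client_sizes, velocity,
                         server_lr=1.0, beta=0.9):
    """Apply one FedAvgM-style server update and return (new_weights, new_velocity)."""
    total = sum(client_sizes)
    # Weighted average of how far each client moved away from the current global model.
    avg_delta = [sum(n * (cw[i] - global_weights[i]) for cw, n in zip(client_weights, client_sizes)) / total
                 for i in range(len(global_weights))]
    # Momentum buffer: decayed previous direction plus this round's average delta.
    velocity = [beta * v + d for v, d in zip(velocity, avg_delta)]
    new_weights = [g + server_lr * v for g, v in zip(global_weights, velocity)]
    return new_weights, velocity


weights, velocity = [0.0, 0.0], [0.0, 0.0]
weights, velocity = server_momentum_step(weights, [[1.0, 2.0], [3.0, 4.0]], [1, 1], velocity)
print(weights)  # [2.0, 3.0]
```

In later rounds the buffer carries part of the previous direction forward, which can smooth out noisy or inconsistent client updates.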

Federated learning algorithms also use methods like Federated Proximal (FedProx), which adds a proximal term to each client's local objective that penalizes drifting away from the current global model. Techniques like these help address non-IID data distributions and communication bottlenecks, improving the overall performance and convergence speed of models trained in a federated manner.
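FedProx changes only the client's local objective. Client k minimizes F_k(w) + (mu/2) * ||w - w_t||^2, where F_k is its usual loss and w_t is the global model it received, so each gradient step gains an extra mu * (w - w_t) term. The sketch below applies this to a toy 1-D linear model y = w*x + b. The values of `mu`, the learning rate, and the data are hypothetical.

```python
def fedprox_local_train(global_weights, data, mu=0.1, lr=0.05, epochs=5):
    """Local SGD on squared error plus the FedProx proximal term (mu/2) * ||w - w_global||^2."""
    w, b = global_weights
    w0, b0 = global_weights  # the global model received at the start of the round
    for _ in range(epochs):
        for x, y in data:
            err = (w * x + b) - y
            # Gradient of the local loss plus mu * (param - global_param) from the proximal term.
            w -= lr * (err * x + mu * (w - w0))
            b -= lr * (err + mu * (b - b0))
    return [w, b]


# Hypothetical client whose data follows y = 3x - 2, starting from a global model at the origin.
data = [(x, 3 * x - 2) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]
print(fedprox_local_train([0.0, 0.0], data, mu=0.0))  # plain local SGD, free to move toward [3, -2]
print(fedprox_local_train([0.0, 0.0], data, mu=5.0))  # pulled back toward the global model
```

A larger `mu` keeps each client's result closer to the global model, limiting client drift on non-IID data; `mu=0` recovers plain local SGD.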

Data Aggregation in Federated Learning

Efficient federated learning depends on aggregation: consolidating the model updates from many decentralized sources into a single model. Aggregation techniques and privacy-preserving methods work together to keep sensitive data secure and confidential during this step.

When aggregating data in federated learning, consider the following:

- Data Aggregation Techniques: Utilize methods such as weighted averaging, secure aggregation, and differential privacy to combine model updates from various devices while protecting individual user data. These techniques help in aggregating information without compromising the privacy of each participant.

- Privacy Preserving Methods: Implement cryptographic protocols like homomorphic encryption or secure multi-party computation to enable secure aggregation of model updates. These techniques let the central server combine encrypted model parameters without seeing any individual participant's update, enhancing data privacy in federated learning scenarios.

- Federated Learning Challenges: Address challenges related to communication efficiency, stragglers, and non-IID data distribution during the aggregation process. Employ strategies like hierarchical aggregation, adaptive learning rates, and model compression to overcome these challenges and improve the overall performance of federated learning systems.

- Optimization Strategies: Optimize the aggregation process by adjusting hyperparameters, tuning aggregation algorithms, and incorporating techniques like client sampling to enhance convergence speed and model accuracy in federated learning setups. By employing efficient optimization strategies, federated learning models can achieve better performance while maintaining data privacy and security.
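Client sampling can be as simple as picking a random fraction of the available clients each round, in the spirit of the client fraction C used in Federated Averaging. The helper below is a hypothetical sketch. Real deployments also filter clients for eligibility and often select extra clients to tolerate stragglers and dropouts.

```python
import math
import random


def sample_clients(available, fraction, rng, min_clients=1):
    """Choose max(min_clients, ceil(fraction * N)) distinct clients uniformly at random, capped at N."""
    k = min(len(available), max(min_clients, math.ceil(fraction * len(available))))
    return rng.sample(available, k)


rng = random.Random(7)  # seeded so the selection is reproducible
client_ids = [f"client-{i}" for i in range(100)]  # hypothetical client identifiers
for round_num in range(3):
    selected = sample_clients(client_ids, fraction=0.1, rng=rng)
    print(round_num, len(selected), selected[:3])
```

Sampling a small fraction per round lowers per-round communication, at the cost of each round seeing only part of the data.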

Federated Learning Frameworks

In exploring Federated Learning Frameworks, one crucial aspect to consider is the selection of appropriate platforms that facilitate efficient model training across decentralized devices. Framework comparison plays a pivotal role in determining the most suitable architecture for federated learning systems. Various frameworks like TensorFlow Federated, PySyft, and Flower offer different features and capabilities, making it essential to assess their strengths and weaknesses for specific use cases.

Model optimization is a key factor in federated learning frameworks. Techniques such as federated averaging and secure aggregation are commonly employed to enhance model performance and maintain data privacy. Evaluating how well these optimization methods integrate with different frameworks is crucial for achieving successful federated learning outcomes.

Resource management is another critical consideration when choosing a federated learning framework. Effective resource allocation and utilization can significantly impact the efficiency and scalability of model training across distributed devices. Frameworks that offer robust resource management capabilities can streamline the training process and improve overall system performance.

Performance evaluation is essential in assessing the effectiveness of federated learning frameworks. Metrics such as communication efficiency, convergence speed, and model accuracy are commonly used to evaluate the performance of federated models. Choosing a framework that aligns with performance requirements is vital for achieving optimal results in federated learning scenarios.

Federated Learning Use Cases

Exploring the practical applications of federated learning across diverse industries reveals its potential to revolutionize collaborative model training strategies. When considering federated learning applications and industry adoption, several key use cases stand out:

- Healthcare: In the healthcare sector, federated learning allows medical institutions to collaboratively train machine learning models on sensitive patient data without centralizing it. This ensures privacy compliance while enabling the development of robust predictive models for personalized medicine and disease diagnosis.

- Finance: Financial institutions leverage federated learning to analyze customer behavior, detect fraudulent activities, and improve risk assessment models. By training models across multiple branches without sharing raw data, banks enhance customer data protection and regulatory compliance.

- Manufacturing: In manufacturing, federated learning enables different factories within a company to jointly train AI models for quality control, predictive maintenance, and process optimization. This distributed learning approach enhances operational efficiency while safeguarding proprietary manufacturing processes.

- Telecommunications: Telecom companies use federated learning to enhance network optimization, predict equipment failures, and improve customer experience. By aggregating insights from various local networks without compromising data security, telecom providers can deliver better services while maintaining data privacy standards.

Conclusion

So there you have it, the fascinating world of federated learning laid out before you.

Despite its challenges, the benefits and potential applications of this innovative approach to machine learning are undeniable.

From data privacy to scalability, federated learning offers a unique solution to many industry problems.

So next time you hear about federated learning, remember the power it holds in revolutionizing the way we approach data sharing and model training.

Stay curious and keep exploring this exciting field!