Ethical Considerations in the Development and Deployment of Conversational AI

The development and deployment of conversational AI raise important ethical questions. Companies working with conversational AI have a moral responsibility to use these technologies in a way that does not harm others. Neglecting ethical factors can jeopardize a project's success and lead to financial losses and reputational damage. Governments and international organizations are also developing regulations to ensure the ethical use of AI technologies. Privacy, transparency, bias, fairness, and accountability are among the key ethical principles that shape conversational AI projects.

Key Takeaways:

- Conversational AI development and deployment require careful ethical considerations.

- Companies must prioritize privacy, transparency, bias, fairness, and accountability.

- Neglecting ethical factors can lead to financial losses and reputational damage.

- Governments and international organizations are working on developing regulations.

- Ethical practices contribute to a trustworthy and socially responsible AI ecosystem.

Privacy in Conversational AI

The protection of privacy is a critical ethical consideration in the development and implementation of conversational AI systems. With these systems often handling Personally Identifiable Information (PII), companies must prioritize data protection and security to ensure user trust and compliance with regulations such as the General Data Protection Regulation (GDPR) in the European Union.

Obtaining user consent is a key safeguard when handling personal data in conversational AI, and under the GDPR it is one of the lawful bases for processing. Companies must clearly communicate to users how their data will be used and, where they rely on consent, obtain it before collecting any information.

Strict data security measures are essential throughout the entire lifecycle of conversational AI systems. This includes secure processing, storage, and transmission of data, as well as ensuring that data is made available only to authorized individuals or organizations. Encryption, access controls, and regular security audits are crucial elements to safeguard user data from unauthorized access or breaches.

To ensure GDPR compliance and protect user privacy, conversational AI platforms often include explicit requests for user consent during interactions. Transparency in explaining the purpose, scope, and potential risks associated with data collection is key to building user trust.
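As a rough illustration of asking for consent during an interaction, the sketch below refuses to store a message until the user has agreed to a named purpose. The class names, the `conversation_storage` purpose label, and the prompt wording are all hypothetical, and the in-memory store stands in for whatever persistent, access-controlled storage a real platform would use.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class ConsentRecord:
    """Hypothetical record of what a user agreed to and when."""
    user_id: str
    purpose: str
    granted_at: datetime


@dataclass
class ConsentStore:
    """In-memory store for illustration only; not persistent or secured."""
    records: dict = field(default_factory=dict)

    def grant(self, user_id: str, purpose: str) -> None:
        self.records[(user_id, purpose)] = ConsentRecord(
            user_id, purpose, datetime.now(timezone.utc)
        )

    def withdraw(self, user_id: str, purpose: str) -> None:
        # Withdrawing consent that was never given is a no-op.
        self.records.pop((user_id, purpose), None)

    def has_consent(self, user_id: str, purpose: str) -> bool:
        return (user_id, purpose) in self.records


def handle_message(store: ConsentStore, user_id: str, text: str, saved: list) -> str:
    """Save the message only if the user has consented to storage."""
    if not store.has_consent(user_id, "conversation_storage"):
        # Nothing is saved on this path; the bot asks first.
        return "Before we continue: may I store this conversation? (yes/no)"
    saved.append((user_id, text))
    return "Thanks, your message was received."
```

Keeping consent per purpose, rather than as a single yes/no flag, makes it possible to withdraw one use of the data without affecting the others.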

In addition to user consent, companies also display links to their privacy policies, enabling users to access detailed information about how their data is collected, used, and managed. These privacy policies outline the data handling practices and user rights in line with applicable laws and regulations.

By prioritizing privacy and implementing robust data protection measures, companies can demonstrate their commitment to responsible AI usage and build trust with users.

Data protection measures in Conversational AI

| Privacy Measure | Description |
|---|---|
| User Consent | Obtain explicit consent from users before collecting and processing their personal data. |
| Secure Processing | Implement encryption and security measures to protect data during processing. |
| Data Storage | Store user data securely and ensure access is restricted to authorized personnel. |
| Data Transmission | Securely transmit data to prevent interception or unauthorized access. |
| Privacy Policies | Provide clear and accessible privacy policies to inform users about data handling practices. |

Ensuring privacy in conversational AI is crucial for maintaining user trust, complying with regulations, and protecting sensitive personal information. By implementing privacy-focused measures, companies can build a strong foundation for ethical and responsible AI usage.

Transparency in Conversational AI

Transparency plays a crucial role in the development and deployment of conversational AI. It ensures that end users fully understand the capabilities and limitations of the AI system, fostering a sense of trust and confidence in the technology. By providing users with insights into how the AI system makes decisions and what it can and cannot do, companies can establish clear expectations and avoid misunderstandings.

One important aspect of transparency is the disclosure of whether the user is interacting with a bot or a human, especially in contexts where sensitive topics are discussed. This disclosure helps users understand the nature of the conversation and manage their expectations accordingly. Companies can achieve transparency by using disclosure messages that clearly indicate the involvement of AI and by openly communicating the AI system’s limitations.
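A disclosure message can be as simple as a fixed notice attached to the bot's reply. The following is a minimal sketch, assuming the surrounding application already knows whether this is the first turn and whether the topic has been flagged as sensitive; the wording of the notice and the function name are illustrative only.

```python
# Illustrative wording; a real notice should be reviewed for the target audience.
AI_DISCLOSURE = (
    "You are chatting with an automated assistant. "
    "It can make mistakes and cannot give professional advice."
)


def with_disclosure(reply: str, is_first_turn: bool, sensitive_topic: bool = False) -> str:
    """Prepend the AI notice on the first turn and on sensitive topics."""
    if is_first_turn or sensitive_topic:
        return f"{AI_DISCLOSURE}\n\n{reply}"
    return reply
```

Repeating the notice on sensitive topics, not only at the start, reflects the point above that disclosure matters most where the stakes of a misunderstanding are highest.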

“Transparency is the foundation for building trust and ensuring that users have a positive experience with conversational AI.”

When users have a clear understanding of the decision-making process behind conversational AI, they can make informed choices and adjust their behavior accordingly. This transparency also helps companies address user concerns, providing explanations for certain responses and building a stronger relationship with the user base. By being transparent and honest about the limitations of the technology, companies can manage user expectations and prevent potential frustrations or misunderstandings.

Building trust through transparency in conversational AI helps foster long-term user loyalty and encourages users to confidently engage with the technology.

Moreover, transparency is essential not only for user understanding but also for regulatory compliance. In some jurisdictions and regulated sectors, such as finance and healthcare, rules may require companies to disclose AI involvement in customer interactions. Transparent communication supports compliance with such rules and helps maintain ethical standards in the deployment of conversational AI.

Benefits of Transparency in Conversational AI:

- Builds trust and confidence in AI technology

- Helps users make informed decisions and adjust behavior

- Addresses user concerns and provides explanations for responses

- Prevents frustrations and misunderstandings

- Demonstrates regulatory compliance

Bias in Conversational AI

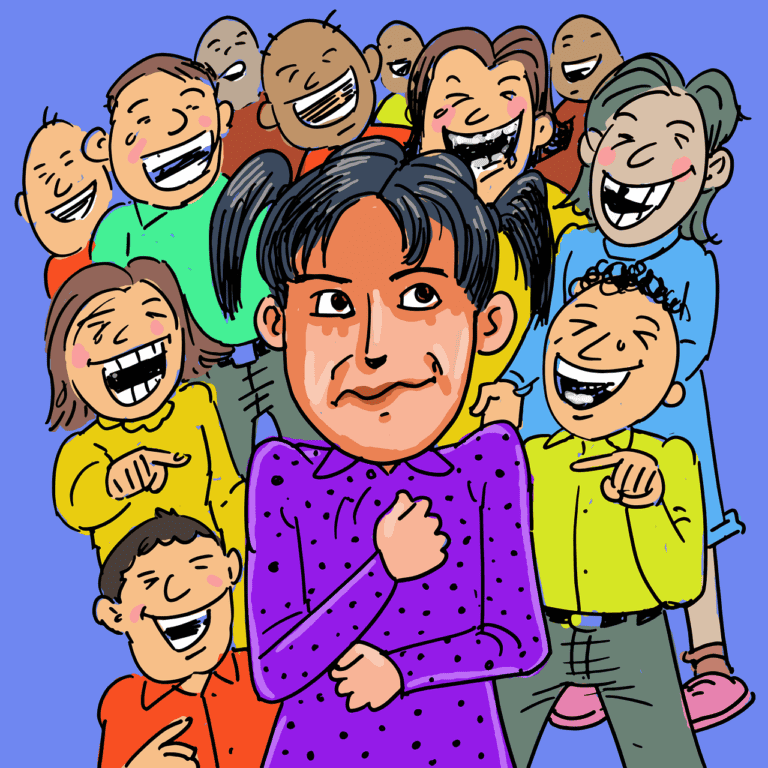

Bias is a significant ethical concern in conversational AI. AI systems learn from real examples of human language, which can result in biased responses based on gender, religion, ethnicity, or political affiliation.

It is crucial for companies to ensure that their training data is well-rounded and representative of society. By using diverse data sets, AI models can learn from a wide range of sources, minimizing the risk of algorithmic bias.

When training conversational AI models, representativeness is key. This means including data from various demographics, cultures, and backgrounds to obtain a more accurate understanding of human language and avoid perpetuating biases.

To address bias in conversational AI, companies should actively manage and analyze their training data. This involves identifying biases, adjusting the training algorithms, and iteratively improving the model’s responses to reduce biased outputs.

User feedback plays a crucial role in mitigating biased responses. By actively collecting and analyzing user feedback on the system’s outputs, companies can identify and address instances of bias, preventing the reinforcement of biased behavior.

It is essential for companies to acknowledge and address bias in conversational AI to ensure fairness, inclusivity, and equal treatment for all users.

By prioritizing fairness in AI development and deployment, companies can build trust with users and promote ethical practices in the field of AI. Fairness should be an integral part of the AI development life cycle, from the collection of training data to the continuous improvement of the AI system.

Example of biased data representation:

| Demographic | Share of Training Data |
|---|---|
| Gender: Male | 80% |
| Gender: Female | 20% |
| Ethnicity: White | 90% |
| Ethnicity: Other | 10% |

As seen in the example table, biased representation in the training data can result in the AI system showing a preference for certain demographics, leading to unfair and biased responses.
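One way to catch this kind of skew early is to compare each group's share of the training data with a reference share. The sketch below uses the gender split from the example table; the 50/50 reference population and the 10-point tolerance are assumptions chosen for illustration, not recommended values.

```python
from collections import Counter


def representation_gaps(labels, reference_shares, tolerance=0.10):
    """Return groups whose observed share differs from the reference
    share by more than `tolerance`, as {group: observed - expected}."""
    if not labels:
        raise ValueError("labels must not be empty")
    counts = Counter(labels)
    total = sum(counts.values())
    gaps = {}
    for group, expected in reference_shares.items():
        observed = counts.get(group, 0) / total
        if abs(observed - expected) > tolerance:
            gaps[group] = round(observed - expected, 2)
    return gaps


# Mirrors the gender rows of the example table: 80% male, 20% female.
labels = ["male"] * 80 + ["female"] * 20
# Assumed reference population (illustrative, not taken from the article).
reference = {"male": 0.5, "female": 0.5}

print(representation_gaps(labels, reference))  # {'male': 0.3, 'female': -0.3}
```

A check like this only measures how often each group appears. It says nothing about how each group is portrayed in the data, so it complements rather than replaces a review of the content itself.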

Addressing bias in conversational AI is crucial for fairness in AI applications. By ensuring adequate representation and diverse training data sets, companies can minimize the risk of algorithmic bias and promote ethical practices in the development and deployment of conversational AI.

Fairness in Conversational AI

Fairness is a critical consideration in the development and deployment of conversational AI systems. It is essential for companies to ensure that their AI systems treat all users equally and avoid any traces of discrimination or exclusion. To achieve fairness, companies must analyze their training data to detect and address biases that may exist. This includes biases based on gender, race, religion, or other protected characteristics.

It is also crucial for AI systems to provide inclusive and non-toxic responses. This means avoiding responses that promote hate speech, offensive language, or stereotypes. By maintaining a high standard of fairness, companies can create AI systems that provide a positive user experience for all users.

In addition to equal treatment, accessibility is another important aspect of fairness in conversational AI. Companies should make their AI systems accessible to all users, including those with disabilities or specific needs. This can be achieved by providing different interaction alternatives, such as voice input, text input, or touch input. Companies should also consider providing content in various formats, such as audio, transcripts, or visual cues, to cater to different user preferences and accessibility requirements.

Ensuring Fairness in Conversational AI

- Analyze training data: Companies should thoroughly examine their training data to identify any biases that may exist.

- Address biases: Once biases are identified, companies must take the necessary steps to address and mitigate them. This may involve retraining models or introducing techniques to enhance fairness in AI responses.

- Diverse data sets: To ensure fairness, it is crucial to use diverse data sets that accurately represent the demographics and experiences of the user population.

- Testing and auditing: Regular testing and auditing of AI systems can help identify and rectify any fairness issues that arise.
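Regular testing can include a simple counterfactual check: send the same prompt with only the group term changed and see whether the answer changes. The sketch below assumes a hypothetical `respond` callable that wraps the chatbot, and it compares replies by exact match after masking the group term, which is a deliberately crude stand-in for a proper similarity or toxicity measure.

```python
def differing_groups(respond, template, groups):
    """Return the groups whose reply differs from the first group's reply.

    `respond` is a hypothetical callable (prompt -> reply text).
    `template` must contain a `{group}` placeholder.
    """
    def normalized(group):
        reply = respond(template.format(group=group))
        # Mask the group term so that merely echoing it is not counted as a difference.
        return reply.replace(group, "<GROUP>").strip().lower()

    baseline = normalized(groups[0])
    return [g for g in groups[1:] if normalized(g) != baseline]


# Stub bots for demonstration; a real test would call the deployed system.
def fair_bot(prompt):
    return "Here are some career tips."


def biased_bot(prompt):
    return "Consider nursing." if "woman" in prompt else "Consider engineering."


template = "Suggest a career for a {group}."
groups = ["man", "woman"]

print(differing_groups(fair_bot, template, groups))    # []
print(differing_groups(biased_bot, template, groups))  # ['woman']
```

A difference flagged here is a prompt for human review, not proof of unfairness, since some prompts legitimately call for different answers.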

By prioritizing fairness in conversational AI, companies can promote equal treatment, enhance user adoption, and contribute to a more inclusive and equitable AI ecosystem.

Accountability in Conversational AI

Accountability is a fundamental ethical principle in the development and deployment of conversational AI. As AI systems continue to advance and become more autonomous, it is crucial for companies to be able to explain the processes, actions, and reasons behind an AI system’s specific responses.

“The moral and ethical responsibility lies with organizations to ensure transparency and accountability in conversational AI, especially as these systems become more integrated into our daily lives.” – Samantha Thompson, AI Ethics Expert

Companies must establish clear processes and controls to ensure the quality, reliability, and traceability of their AI systems. This involves defining guidelines and protocols for the training, testing, and deployment phases of conversational AI projects.
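Traceability in practice often starts with a log entry for every response that records which model and which data produced it. The following is a minimal sketch under assumed field names; the version strings and the choice of a JSON Lines file are illustrative, not a prescribed format.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(user_input, response, model_version, data_version):
    """Build one traceability entry for a single bot response."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,          # hypothetical version label
        "training_data_version": data_version,   # hypothetical version label
        # Store a hash instead of the raw input so the log holds no user text.
        "input_sha256": hashlib.sha256(user_input.encode("utf-8")).hexdigest(),
        "response": response,
    }


def append_to_log(path, record):
    """Append the record as one JSON line to the log file at `path`."""
    with open(path, "a", encoding="utf-8") as log:
        log.write(json.dumps(record) + "\n")
```

Note that the response text is stored in full here. If responses can echo personal data, they would need the same protection as any other user data.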

One of the challenges in achieving accountability lies in the rise of generative AI. As AI systems increasingly generate content, debates surrounding ownership and intellectual property rights for AI-generated content have emerged. Companies must navigate these complexities and establish frameworks to determine the ownership and responsible usage of AI-generated content.

Ensuring accountability in conversational AI is not only an ethical imperative but also a means to mitigate risks. It helps to maintain user trust, protect against biases or discriminatory outputs, and uphold ethical standards throughout the AI development and deployment process.

Benefits of Accountability in Conversational AI:

- Promotes transparent and responsible AI practices

- Builds trust with users by explaining decision-making processes

- Allows for the identification and mitigation of biases and discriminatory outputs

- Enables high-quality, reliable, and traceable AI system performance

- Engenders responsible and ethical usage of AI-generated content

Companies that prioritize accountability in conversational AI demonstrate a commitment to ethical development and deployment practices. By fostering transparency and understanding, these organizations can build trust with users, mitigate risks, and contribute to the responsible advancement of AI technologies.

The Importance of Ethical Guidelines in Conversational AI

Ethical guidelines are integral to the successful development and deployment of conversational AI systems. By incorporating responsible AI practices into their processes, companies can fulfill their moral responsibilities while safeguarding their reputation and avoiding financial losses. Governments and international organizations recognize the significance of ethical AI use and are actively working on regulations to enforce compliance.

Adhering to ethical guidelines is not only a moral obligation but also a strategic decision. It ensures that conversational AI technology is deployed responsibly, mitigating the risks associated with biased algorithms, privacy violations, and discriminatory practices. Companies that prioritize ethics in their AI initiatives build trust with users and the broader society, fostering long-term loyalty and positive brand perception.

Responsible AI Practices

Embedding ethical guidelines into AI development and deployment practices is essential. These guidelines should govern the entire lifecycle of conversational AI systems, from data collection and model training to system outputs and user interactions. Responsible AI practices include:

- Conducting thorough audits of training data to identify and mitigate biases.

- Ensuring transparency and providing users with clear information about AI system capabilities and limitations.

- Obtaining informed consent from users for data collection and processing.

- Regularly monitoring and analyzing AI system outputs to detect and address any potential ethical issues.

- Establishing mechanisms for user feedback and addressing concerns promptly and transparently.

Moral Responsibilities

Developers and organizations have moral responsibilities when it comes to conversational AI. They must prioritize the well-being and rights of the individuals and communities who interact with their AI systems. These responsibilities extend beyond legal compliance to the broader social effects of how AI is developed and deployed.

By embracing ethical guidelines, developers and organizations can navigate complex moral dilemmas and ensure that the societal impact of conversational AI remains positive. Ethical responsibility includes addressing biases, promoting fairness, respecting user privacy, and being accountable for the actions and decisions made by AI systems.

The Impacts of Neglecting Ethical Guidelines

Neglecting ethical guidelines in conversational AI can have severe consequences for companies. Financial losses can occur due to reputational damage resulting from ethical misconduct. Public trust and customer loyalty are easily eroded when AI systems demonstrate biases, discrimination, or privacy violations.

Moreover, legal and regulatory penalties can be imposed on companies that fail to adhere to ethical guidelines in AI development and deployment. Governments and international bodies are actively monitoring and regulating AI applications to protect individuals and society at large.

Regulations and Enforcement

Recognizing the significance of ethical AI use, governments and international organizations are actively working on regulations to enforce responsible AI practices. While the specifics may vary by jurisdiction, the focus remains on safeguarding individual rights, promoting fairness, and ensuring transparency.

Companies must stay informed about evolving regulatory frameworks and ensure compliance with ethical guidelines specific to their industry and geographical location. This not only minimizes legal risks but also demonstrates a commitment to responsible and accountable AI development and deployment.

Building Trust and Loyalty through Ethical Conversational AI

Ethical conversational AI practices play a vital role in building trust and loyalty among customers while enhancing a brand’s reputation.

As customers interact more often with AI technologies, their trust depends on those interactions being reliable and honest. The same applies inside organizations: employees are more likely to see AI as a valuable co-worker when it is used responsibly.

When conversational AI is used ethically and responsibly, it can improve decision-making, customer service, and overall brand performance, which in turn can support revenue and customer satisfaction.

Brands that prioritize responsible AI usage create a customer-centric environment, ensuring that user needs are met and exceeded. By prioritizing trust and loyalty through ethical conversational AI practices, businesses can establish long-term relationships with customers, leading to increased brand loyalty and advocacy.

In summary, building trust and loyalty through ethical conversational AI practices not only improves customer experiences but also has a direct impact on a brand’s reputation and revenue. With responsible AI usage, companies can create an environment that fosters trust, drives customer loyalty, and maximizes the potential of conversational AI technology.

The Growth of Conversational AI and its Ethical Implications

Conversational AI is rapidly expanding and finding its way into various sectors of society. This growth brings about numerous benefits, such as improved healthcare and enhanced educational experiences. However, along with these advancements, it is crucial to address the ethical implications that arise from the development and deployment of conversational AI.

One of the primary ethical concerns is the potential perpetuation of inequality. As conversational AI becomes more prevalent, there is a risk of it exacerbating existing social and economic disparities. Therefore, careful consideration must be given to ensure that AI technology is developed and utilized in a way that promotes inclusivity and equal opportunities.

Another significant ethical concern is the violation of privacy. Conversational AI systems often handle sensitive personal information, creating the need for robust data protection measures. Companies and organizations must prioritize data security and comply with relevant privacy regulations to safeguard user privacy and prevent unauthorized access or misuse of personal data.

Additionally, accountability is a crucial aspect of ethical AI deployment. As AI systems become more autonomous, it is important to establish mechanisms for tracing their actions and for holding the organizations that deploy them accountable for the resulting decisions. This fosters transparency, ensures responsible AI usage, and helps prevent potential harm arising from the unchecked actions of AI technologies.

Addressing Ethical Concerns in Conversational AI

To address these ethical concerns effectively, a multi-faceted approach is required. This includes:

- Implementing clear guidelines and regulations for the development and deployment of conversational AI to promote responsible and transparent practices.

- Conducting thorough assessments of the potential societal impact and ethical implications of AI systems before deployment.

- Ensuring diversity and representativeness in the training data used to mitigate biases and prevent discriminatory responses.

- Providing transparency to users regarding the capabilities, limitations, and decision-making processes of conversational AI systems.

- Establishing mechanisms for user feedback and accountability, allowing users to report issues or challenge AI-generated outcomes.

Conversational AI has the potential for immense societal benefits, but ethical considerations must be at the forefront of its development and deployment to ensure a fair, safe, and trustworthy AI ecosystem.

By addressing the ethical concerns surrounding conversational AI, we can harness its growth to empower individuals and communities, improve service delivery, and create a more equitable and inclusive future.

| Benefit | Ethical Concern |
|---|---|
| Improved healthcare | Privacy violations |
| Enhanced education | Inequality |
| Efficient customer service | Accountability |

Key Ethical Considerations of Conversational AI

When developing and deploying conversational AI systems, organizations must prioritize key ethical considerations. These considerations play a crucial role in ensuring responsible and successful implementation. In this section, we will explore the defined goals, differentiation of capabilities, transparency, and alternatives to AI that organizations need to keep in mind.

Defined Goals

One of the fundamental ethical considerations in conversational AI is having clear and well-defined goals. Organizations should have a deep understanding of why they are using chatbots and virtual assistants and how they align with the needs and expectations of their users. By setting defined goals, organizations can ensure that their conversational AI systems are purposeful and focused, leading to a more meaningful user experience.

Differentiation of Capabilities

In order to provide valuable and effective interactions, conversational AI systems need to be equipped with the right capabilities. Organizations must carefully differentiate the capabilities of their chatbots and virtual assistants, ensuring that they align with user expectations and needs. By focusing on enhancing the technology’s capabilities, organizations can deliver more accurate and personalized conversational experiences.

Transparency

Transparency is a critical aspect of ethical conversational AI. Users need to understand how chatbots and virtual assistants operate and what data is being collected and used. Organizations should communicate the goals, capabilities, and data usage policies of their conversational AI systems clearly to build trust with users. Transparent communication helps users make well-informed decisions and feel confident about their interactions.

Alternatives to AI

While conversational AI brings numerous benefits, organizations should also provide alternatives to AI-driven interactions. This allows users to choose the mode of engagement that best suits their preferences or needs. Options such as human support or monitored messaging help ensure inclusivity and accommodate users of different ages and levels of comfort with technology. By offering alternatives, organizations can enhance the overall user experience and meet diverse user needs.
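Offering a human alternative usually means deciding, per message, whether the bot should answer at all. The sketch below routes to a person when the user asks for one or when the bot's confidence is low; the phrase list, the `bot_confidence` score, and the 0.6 threshold are assumptions for illustration only.

```python
# Hypothetical trigger phrases; a real system would use intent detection.
HANDOFF_PHRASES = ("human", "agent", "representative", "real person")


def route(message: str, bot_confidence: float, threshold: float = 0.6) -> str:
    """Return "human" if the user asks for a person or the bot is unsure,
    otherwise "bot"."""
    text = message.lower()
    if any(phrase in text for phrase in HANDOFF_PHRASES):
        return "human"
    if bot_confidence < threshold:
        return "human"
    return "bot"


print(route("I want to talk to a human", 0.9))  # human
print(route("What are your opening hours?", 0.9))  # bot
print(route("What are your opening hours?", 0.3))  # human
```

Checking the explicit request before the confidence score means a user who asks for a person always gets one, however sure the bot is of its answer.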

The Key Ethical Considerations of Conversational AI

| Ethical Consideration | Description |
|---|---|
| Defined Goals | Organizations must have clear goals for using chatbots and virtual assistants, aligning them with user needs and expectations. |
| Differentiation of Capabilities | Conversational AI systems should be differentiated based on their capabilities to provide personalized and valuable interactions. |
| Transparency | Clear communication about the goals, capabilities, and data usage policies of conversational AI systems to build trust with users. |
| Alternatives to AI | Providing options such as human support or monitored messaging to accommodate different user preferences or needs. |

By considering these key ethical aspects, organizations can ensure responsible and user-centric conversational AI implementations. Defined goals, differentiated capabilities, transparency, and alternatives to AI contribute to a more ethical and trustworthy conversational AI ecosystem.

Conclusion

Ethical considerations play a critical role in the development and deployment of conversational AI. It is essential for organizations to prioritize responsible AI development and deployment to build trust with users and minimize risks. By incorporating ethical principles into their conversational AI projects, companies can enhance user experiences and contribute to a more trustworthy and morally conscious AI ecosystem.

Privacy is a significant ethical consideration in conversational AI, as companies must ensure that user data is protected and that consent is obtained. Transparency is another key guideline, as users should have a clear understanding of how AI systems make decisions and what their limitations are. Fairness is crucial to avoid biases and discrimination in AI responses, while accountability ensures organizations can explain their AI systems’ actions and reasons.

By embracing ethical considerations, organizations can address the societal impact of AI and prevent potential negative consequences. Responsible AI development and deployment not only foster trust but also uphold the moral responsibilities of using AI technologies. Adhering to ethical guidelines in conversational AI contributes to the overall success and positive societal impact of AI.