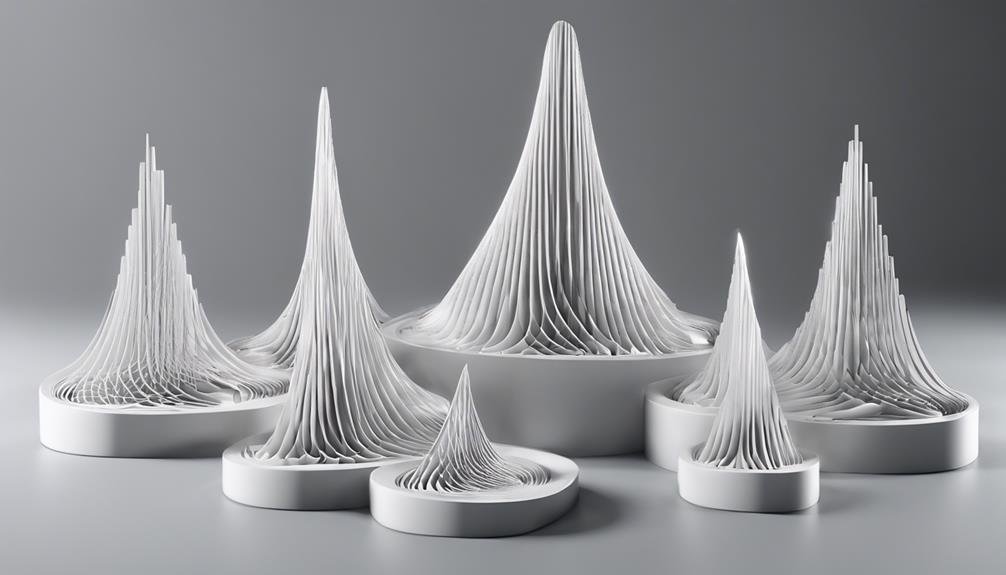

Central Limit Theorem (CLT): Definition and Key Characteristics

The Central Limit Theorem (CLT) is a cornerstone of statistics. It states that the distribution of sample means approaches a normal distribution as the sample size grows, whatever the shape of the underlying population, provided the population has a finite variance. Understanding the theorem is vital for drawing accurate inferences from sample data. Sample means also cluster more tightly around the population mean as the sample size grows, which makes estimates more reliable. This article covers the theorem's history, the properties of the distribution of sample means, and the sample size considerations that matter in applications such as finance and correlation analysis.

Key Takeaways

- The CLT describes how the distribution of sample means approaches a normal distribution as the sample size increases.

- The sample mean converges to the population mean as the sample size grows, giving more accurate estimates.

- Abraham de Moivre (1733) and George Pólya (1920) are key figures in the theorem's history.

- The distribution of sample means tends towards normality as the sample size grows.

- Adequate sample sizes are crucial for accurate estimation in statistical analysis, especially in finance.

CLT Definition

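The Central Limit Theorem (CLT) is a fundamental statistical principle: the distribution of sample means approaches a normal distribution as the sample size increases, whatever the shape of the population distribution, as long as the observations are independent and the population variance is finite.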

This theorem is essential in statistical inference as it allows for the understanding of how sample distributions behave as sample sizes grow.

As the sample size increases, the sample mean also converges towards the population mean (strictly speaking, this is the law of large numbers, a companion result), enabling more accurate statistical predictions.

The CLT plays an important role in statistical analysis by simplifying otherwise complex calculations: normal-based methods such as confidence intervals and z-tests can be applied to sample means even when the population itself is not normal.
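Stated more formally, the classical version of the theorem can be written as follows. The notation is introduced here for illustration and is not taken from the text above: $X_1, \dots, X_n$ are assumed to be independent, identically distributed observations with mean $\mu$ and finite variance $\sigma^2 > 0$, and $\bar{X}_n$ is their sample mean.

$$
\bar{X}_n = \frac{1}{n}\sum_{i=1}^{n} X_i,
\qquad
\sqrt{n}\,\frac{\bar{X}_n - \mu}{\sigma} \xrightarrow{d} \mathcal{N}(0, 1)
\quad \text{as } n \to \infty .
$$

In words, the standardized sample mean converges in distribution to a standard normal variable, so for large $n$ the sample mean behaves approximately like a normal variable with mean $\mu$ and standard deviation $\sigma / \sqrt{n}$.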

Mean Approximation

As sample sizes increase, sample means cluster more tightly around the population mean, which makes statistical estimates more precise. The spread of the sample mean, known as its standard error, equals the population standard deviation divided by the square root of the sample size, so quadrupling the sample size halves the sampling variability. Larger samples therefore give a more reliable approximation of the true population mean.
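The reduction in sampling variability can be checked with a short simulation. The sketch below is illustrative only: the fair six-sided die used as the population, the function name `sd_of_sample_means`, the trial count and the seed are all assumptions chosen for the example. It compares the observed standard deviation of sample means with the theoretical value, the population standard deviation divided by the square root of the sample size.

```python
import random
import statistics

# Population SD of one fair die roll: sqrt(35/12), about 1.708.
SIGMA = (35 / 12) ** 0.5


def sd_of_sample_means(n, trials=4000, seed=0):
    """Empirical standard deviation of the mean of n fair die rolls."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    means = [
        statistics.fmean([rng.randint(1, 6) for _ in range(n)])
        for _ in range(trials)
    ]
    return statistics.stdev(means)


for n in (10, 40, 160):
    observed = sd_of_sample_means(n)
    predicted = SIGMA / n ** 0.5
    print(f"n={n:4d}  observed={observed:.3f}  sigma/sqrt(n)={predicted:.3f}")
```

Each fourfold increase in the sample size should roughly halve the observed spread, which is why gains in precision become more expensive as samples get larger.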

Historical Development

The Central Limit Theorem has a long history. Abraham de Moivre published the earliest version in 1733, using the normal curve to approximate the binomial distribution. In 1920, George Pólya gave the theorem the name it carries today. The table below summarizes these two contributions:

| Year | Contributor | Contribution |
|---|---|---|
| 1733 | Abraham de Moivre | Normal approximation to the binomial distribution, the earliest form of the CLT |
| 1920 | George Pólya | Coined the name "central limit theorem" |

Distribution Characteristics

The distribution characteristics of the CLT concern how the statistical properties of sample means change as the sample size increases.

As the sample size grows, the distribution of sample means becomes more symmetric and bell-shaped, approaching a normal distribution even when the population itself is skewed. This shift towards normality is vital for statistical inference, as it allows for more reliable predictions about population parameters based on sample data.

Understanding these distribution characteristics is fundamental in ensuring the validity of statistical conclusions drawn from samples and highlights the importance of sample size in the application of the CLT.
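One way to see the shift towards normality is to track the skewness of the sample means, which is zero for a perfectly symmetric distribution. The following is a minimal sketch under stated assumptions: the population is taken to be exponential with rate 1 (a strongly right-skewed choice made only for illustration), and the helper names `skewness` and `sample_means` are hypothetical. For independent observations the skewness of the sample mean equals the population skewness divided by the square root of the sample size, so here it should fall from about 2 towards 0.

```python
import random
import statistics


def skewness(values):
    """Sample skewness: the average cubed z-score (0 for a symmetric shape)."""
    mu = statistics.fmean(values)
    sd = statistics.pstdev(values)
    return statistics.fmean([((v - mu) / sd) ** 3 for v in values])


def sample_means(n, trials=5000, seed=1):
    """Means of `trials` samples of size n from an exponential(1) population."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    return [
        statistics.fmean([rng.expovariate(1.0) for _ in range(n)])
        for _ in range(trials)
    ]


for n in (1, 5, 30, 100):
    print(f"n={n:3d}  skewness of sample means = {skewness(sample_means(n)):.2f}")
```

With `n=1` the output reflects the skewed population itself. The residual skewness at moderate sample sizes is a reminder that strongly skewed data may need larger samples before the normal approximation is comfortable.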

Sample Size Considerations

Choosing an appropriate sample size is essential when applying the Central Limit Theorem. This matters especially in finance, where portfolio risk assessment and correlation analysis depend on sample-based estimates.

In portfolio risk assessment, sampling at least 30-50 stocks across various sectors is commonly recommended to obtain a representative sample. Adequate sample sizes enable a more accurate estimation of risks and correlations within a portfolio.

Similarly, in correlation analysis, a sufficient sample size is essential for drawing reliable conclusions about the relationships between variables. Understanding how sample size affects an analysis is therefore fundamental to obtaining robust and meaningful results.
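To make the effect of sample size on correlation estimates concrete, the sketch below computes an approximate 95% confidence interval for a correlation coefficient at several sample sizes. It uses the Fisher z-transform, a standard large-sample technique that the text above does not mention and that assumes roughly bivariate normal data. The function name `correlation_ci` and the example correlation of 0.5 are hypothetical choices for illustration.

```python
import math


def correlation_ci(r, n, z_crit=1.96):
    """Approximate 95% CI for a Pearson correlation via the Fisher z-transform."""
    if n <= 3:
        raise ValueError("n must be greater than 3")
    z = math.atanh(r)            # Fisher transform of the observed correlation
    se = 1.0 / math.sqrt(n - 3)  # approximate standard error on the z scale
    return math.tanh(z - z_crit * se), math.tanh(z + z_crit * se)


for n in (10, 30, 50, 500):
    low, high = correlation_ci(0.5, n)
    print(f"n={n:3d}  r=0.50  95% CI = ({low:.2f}, {high:.2f})")
```

The interval narrows as the sample size grows, which is the practical reason small samples support only tentative conclusions about how two variables move together.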

Population Mean Relationship

The relationship between the population mean and sample means is central to the role of the Central Limit Theorem in statistical inference. How closely a sample mean tracks the population mean depends on the sample size: larger samples tend to mirror the population mean more closely.

As the sample size increases, the sample mean becomes a more accurate estimator of the population mean. The mean of the distribution of sample means equals the population mean, so sample means are centred on the right value and scatter less around it as the sample grows.

Understanding this relationship is essential for drawing valid inferences and making predictions based on sample data in various fields of study.
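The link between sample means and the population mean follows from a short calculation. The symbols are introduced here as assumptions for illustration: $X_1, \dots, X_n$ are independent observations from a population with mean $\mu$ and variance $\sigma^2$, and $\bar{X}_n$ is their average.

$$
\mathbb{E}[\bar{X}_n] = \frac{1}{n}\sum_{i=1}^{n}\mathbb{E}[X_i] = \mu,
\qquad
\operatorname{Var}(\bar{X}_n) = \frac{1}{n^2}\sum_{i=1}^{n}\operatorname{Var}(X_i) = \frac{\sigma^2}{n}.
$$

The first identity holds for every sample size, so the sample mean is centred on the population mean even for small samples. The second, which relies on independence, shows that only the spread around that centre depends on $n$.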

Accuracy and Predictions

How does the Central Limit Theorem contribute to the precision of statistical analysis and the accuracy of predictions?

The Central Limit Theorem (CLT) improves prediction accuracy by making sampling variability quantifiable. Because the distribution of sample means is approximately normal for large samples, analysts can attach confidence intervals and margins of error to estimates of population characteristics.

The theorem thus enables researchers to draw inferences about a population from sample data and to judge how far those inferences can be trusted, which makes it a cornerstone of statistical analysis.
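A simple way to see what the CLT buys in practice is to check how often a normal-based 95% confidence interval actually contains the true mean. The sketch below is an illustration under stated assumptions, not a prescribed procedure: the exponential population with rate 1 (true mean 1), the function name `ci_coverage`, the trial count and the seed are all chosen for the example.

```python
import random
import statistics


def ci_coverage(n, trials=2000, seed=2):
    """Share of nominal 95% intervals (mean +/- 1.96*s/sqrt(n)) covering the true mean."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    true_mean = 1.0            # mean of an exponential population with rate 1
    hits = 0
    for _ in range(trials):
        sample = [rng.expovariate(1.0) for _ in range(n)]
        centre = statistics.fmean(sample)
        half_width = 1.96 * statistics.stdev(sample) / n ** 0.5
        if centre - half_width <= true_mean <= centre + half_width:
            hits += 1
    return hits / trials


for n in (5, 30, 200):
    print(f"n={n:3d}  coverage of nominal 95% interval = {ci_coverage(n):.3f}")
```

For a skewed population like this one, coverage typically falls short of the nominal level at small sample sizes and moves closer to 95% as the sample grows, which is the normal approximation taking hold.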

Conclusion

To sum up, the Central Limit Theorem is a foundation of statistical inference. It explains why sample means are approximately normally distributed for large samples and why their precision improves with sample size, which lets researchers move from sample data to well-founded conclusions about population parameters.